This is the final installment of a short series of posts detailing what I’ve learned while exploring the possible directions that a redesigned Doomsday renderer could take. This post is about integrating the new renderer into the existing engine.

Importing levels from Doom/Hexen formats

As with the Doomsday 1 renderer, the original levels stored in WAD files are not directly usable for rendering. DOOM levels are authored as polygonal sectors with lines representing walls, and the original Doom renderer is based on a 2D BSP where the sectors have been split into convex subsectors plus related pieces of walls. At runtime, the BSP is traversed to quickly sort the wall segments in those subsectors in front-to-back order. However, this way of doing things is not that relevant when going in with the assumption that the entire level is already in GPU memory. For larger levels, it is still beneficial to roughly split the level into chunks and sort them to avoid unnecessary overdraw, but by and large the GPU is powerful enough to draw all the triangles of the map without additional culling. If culling is necessary due to an excessively complex map, it is still best to do it entirely on the GPU using indirect draws. I haven’t yet explored this direction very far, but ultimately it will depend on how much additional detail gets added to the surface meshes.
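For reference, the front-to-back ordering that the original renderer gets out of the BSP can be sketched roughly as follows. This is a simplified illustration, not code from Doom or Doomsday: the `BspNode` struct and the function names are made up for the example, and a real WAD stores nodes in a compact binary layout instead.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical, simplified BSP node: a partition line plus two children.
// Leaves have no children and carry a subsector index instead.
struct BspNode {
    double x = 0, y = 0;    // a point on the partition line
    double dx = 0, dy = 0;  // direction of the partition line
    std::unique_ptr<BspNode> right, left;
    int subsector = -1;     // only meaningful for leaves
    bool isLeaf() const { return !right && !left; }
};

// True if the point is on the right-hand side of the partition line.
bool onRightSide(const BspNode &n, double px, double py) {
    return (px - n.x) * n.dy - (py - n.y) * n.dx >= 0;
}

// Visits the child on the viewer's side first, then the other one, so
// subsectors are appended in front-to-back order.
void visitFrontToBack(const BspNode *n, double viewX, double viewY,
                      std::vector<int> &order) {
    if (!n) return;
    if (n->isLeaf()) {
        order.push_back(n->subsector);
        return;
    }
    const bool right = onRightSide(*n, viewX, viewY);
    visitFrontToBack(right ? n->right.get() : n->left.get(), viewX, viewY, order);
    visitFrontToBack(right ? n->left.get() : n->right.get(), viewX, viewY, order);
}
```

The same near-side-first idea would apply to coarse level chunks, only with far fewer items to sort.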

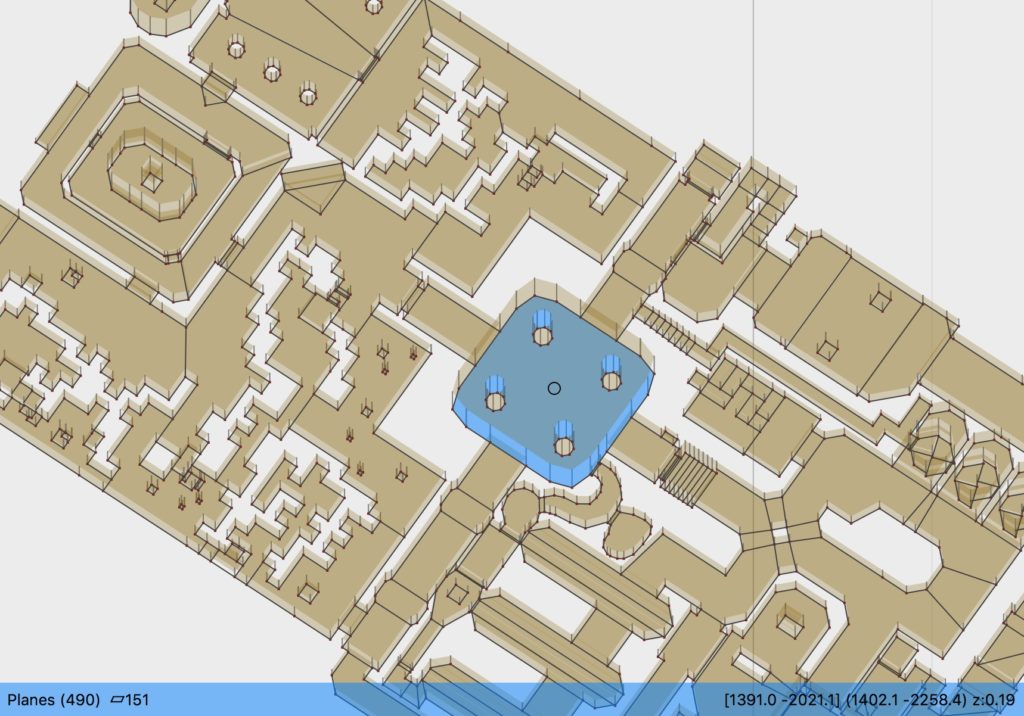

So, what exactly is needed for rendering? A triangulation of each sector’s planes and walls is still required (i.e., converting the polygons to a corresponding set of triangles). Walls are rather trivial, but triangulating arbitrary polygons is a different matter: one needs to account for polygons with arbitrary holes inside, and single edge points that connect to multiple lines. Fortunately, this problem has been studied for decades, so there are several solutions available. In the end, I wrote my own routines that import the sector polygons from a WAD file and go through various manipulations to end up with a clean set of sector polygons and triangulations (i.e., a triangle mesh that covers the sector area).

The nice part is that this does not actually require creating any new vertices; unlike the subsectors produced by BSP construction, sector divisions can use the corner points themselves for subdividing the polygon. The end result is a smaller number of vertices and triangles. In other words, the importer can rely on the original representation of the level as created by the level author, and ignore the BSP tree and other such derived data. (The BSP data is still useful for gameplay routines, though.)
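To illustrate how a polygon can be triangulated using only its own corner points, here is a minimal sketch of ear clipping, one of the textbook techniques. It is not the importer's actual routine: it assumes a simple polygon with counter-clockwise winding, and it does not handle the holes or shared points mentioned above. All names are made up for the example.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec2 { double x, y; };

// Twice the signed area of triangle (a, b, c); positive when counter-clockwise.
double cross(const Vec2 &a, const Vec2 &b, const Vec2 &c) {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// True if p is inside or on the boundary of the counter-clockwise triangle.
bool insideTriangle(const Vec2 &p, const Vec2 &a, const Vec2 &b, const Vec2 &c) {
    return cross(a, b, p) >= 0 && cross(b, c, p) >= 0 && cross(c, a, p) >= 0;
}

// Ear clipping: returns index triples into `poly`; no new vertices are created.
std::vector<std::array<std::size_t, 3>> triangulate(const std::vector<Vec2> &poly) {
    std::vector<std::array<std::size_t, 3>> tris;
    std::vector<std::size_t> idx(poly.size());
    for (std::size_t i = 0; i < idx.size(); ++i) idx[i] = i;

    while (idx.size() > 3) {
        bool clipped = false;
        for (std::size_t i = 0; i < idx.size(); ++i) {
            const std::size_t ia = idx[(i + idx.size() - 1) % idx.size()];
            const std::size_t ib = idx[i];
            const std::size_t ic = idx[(i + 1) % idx.size()];
            const Vec2 &a = poly[ia], &b = poly[ib], &c = poly[ic];
            if (cross(a, b, c) <= 0) continue;  // reflex or degenerate corner
            bool empty = true;
            for (std::size_t j : idx) {
                if (j == ia || j == ib || j == ic) continue;
                if (insideTriangle(poly[j], a, b, c)) { empty = false; break; }
            }
            if (!empty) continue;               // another corner blocks this ear
            tris.push_back({ia, ib, ic});
            idx.erase(idx.begin() + static_cast<std::ptrdiff_t>(i));
            clipped = true;
            break;
        }
        if (!clipped) break;  // malformed input; stop rather than loop forever
    }
    if (idx.size() == 3) tris.push_back({idx[0], idx[1], idx[2]});
    return tris;
}
```

A polygon with n corners yields n - 2 triangles this way, all of them referring to the original vertices by index.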

Although I already have the level importer up and running, there are still details that need some fine-tuning. For example, there are special flags that control how wall textures get positioned, and that behavior needs to be replicated in the shaders when calculating texture coordinates for materials. The trickiest part here is getting everything to match the original software renderer, particularly when it comes to emulating the various hacks and quirks that level authors use for creating certain special effects. These will have to be detected during the importing phase so that corresponding geometry can be generated without the renderer actually having any knowledge of this special behavior.
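As one example of such flags, the "upper unpegged" and "lower unpegged" linedef flags change which edge a wall texture is anchored to. The sketch below computes the world-space height of the texture's top row for each wall section, following the rules of the original software renderer. The flag bit values come from the Doom map format; the types and function name are hypothetical, sidedef offsets are left out, and the middle section only covers one-sided lines.

```cpp
#include <cassert>
#include <cstdint>

// Linedef flag bits from the Doom map format.
constexpr std::uint16_t DontPegTop    = 0x08;  // "upper unpegged"
constexpr std::uint16_t DontPegBottom = 0x10;  // "lower unpegged"

enum class WallSection { Upper, Middle, Lower };

// Hypothetical helper type: plane heights on both sides of a line.
struct WallHeights {
    double frontFloor, frontCeiling;
    double backFloor, backCeiling;  // ignored for the one-sided middle section
};

// World-space Z where the top row of the texture is placed.
double textureTopZ(WallSection section, std::uint16_t flags,
                   const WallHeights &h, double textureHeight) {
    switch (section) {
    case WallSection::Upper:
        // Default: bottom of the texture sits on the back ceiling.
        return (flags & DontPegTop) ? h.frontCeiling
                                    : h.backCeiling + textureHeight;
    case WallSection::Middle:
        // Default: top of the texture hangs from the ceiling.
        return (flags & DontPegBottom) ? h.frontFloor + textureHeight
                                       : h.frontCeiling;
    case WallSection::Lower:
        // Default: top of the texture starts at the back floor.
        return (flags & DontPegBottom) ? h.frontCeiling : h.backFloor;
    }
    return h.frontCeiling;  // unreachable with a valid WallSection
}
```

The vertical texture coordinate at height z is then the distance from this value down to z, which could be computed at import time or in the shader.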

Map packages

The Doomsday 2 package system plays an integral part in the new renderer. The package system was designed to simplify and modularize finding and loading resources. It will be used here to act as the sole delivery mechanism to feed data to the renderer: everything from level geometry to textures will be contained within one or more packages.

The D1 renderer grew rather organically (without knowledge of the future, naturally), so the internal boundaries did not form cleanly. For example, the way textures are loaded is convoluted, and the logic spans many subsystems from the file system code to the image loader. In a clean architecture, components are isolated and need minimal knowledge of each other to function; interfaces are small. For the D2 renderer, this is a central concern. It needs no knowledge of how id Tech 1 textures are found and loaded, for instance; it just needs to be given images and coordinates. This is the core reason why my exploration work has been done in isolation, separate from the old engine: it is easier to rethink and re-evaluate the necessity of every detail and focus on the minimal required set of functionality.

In practice, the map importer writes a map package using the same format that a manually authored Doomsday 2 map package would use. One immediate advantage is that map packages can be cached, so the importing process does not need to be repeated. When the game loads a particular level, the renderer is set up simply by loading the corresponding map package. Internally, this greatly simplifies the APIs needed for the renderer, since there is no need to programmatically build and configure the renderer data structures. Everything can be handed over in a self-contained package.
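The caching idea can be sketched as a lookup keyed by a hash of the source map data: the importer only runs when no package exists for that key yet. This is an assumption about how such a cache could work, not a description of Doomsday's implementation. The class and its members are hypothetical, packages are reduced to byte buffers held in memory, and FNV-1a is simply a convenient hash for the example.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <utility>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// 64-bit FNV-1a hash of the source map data; used only as a cache key.
std::uint64_t fnv1a(const Bytes &data) {
    std::uint64_t h = 14695981039346656037ull;
    for (std::uint8_t b : data) {
        h ^= b;
        h *= 1099511628211ull;
    }
    return h;
}

// Hypothetical in-memory stand-in for a cache of imported map packages.
class MapPackageCache {
public:
    using Importer = std::function<Bytes(const Bytes &)>;

    explicit MapPackageCache(Importer importer) : _importer(std::move(importer)) {}

    // Returns the cached package, running the importer only on a cache miss.
    const Bytes &packageFor(const Bytes &wadMapData) {
        const std::uint64_t key = fnv1a(wadMapData);
        auto found = _packages.find(key);
        if (found == _packages.end()) {
            found = _packages.emplace(key, _importer(wadMapData)).first;
            ++importCount;
        }
        return found->second;
    }

    int importCount = 0;  // how many times the importer actually ran

private:
    Importer _importer;
    std::map<std::uint64_t, Bytes> _packages;
};
```

A persistent version would write the packages to disk and would probably also need to include the importer version in the key, so that cached packages are rebuilt when the importer changes.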

Assets like materials, textures, shaders, and scripts can be embedded in the map package, or shared from other packages (e.g., one package for all textures imported from DOOM.WAD). This provides an unambiguous way to handle PWAD custom textures, too. Even 3D model assets can be embedded in map packages because they use the same package system that the 3D model renderer is already using. This is useful for both surface decoration models and customized objects.

In the cases where data from the original WAD levels is still needed (like the aforementioned 2D BSP), contents of the original unmodified WAD lumps can be included as separate files inside the map package, where the data can then be loaded easily. Some of this data can also be regenerated on the fly, but the importer should rely on the original data as much as possible.

Next steps

Perhaps the biggest missing feature that I still want to explore is global illumination. DOOM maps often have large rooms and areas that are lit in an ambient fashion, or by large luminous surfaces, so it is important to have the lighting behave in the appropriate volumetric way in these spaces. I have a good feeling about using the GPU for processing this lighting data (in a semi-dynamic fashion), but it will take some work to find the correct approach.

Currently the new rendering code resides in a completely separate test application, but eventually it will be integrated into the main Doomsday executable. I have already split the new renderer into a library of its own, to keep its dependencies clean, so the rest of Doomsday can pretty much treat it as a black box that is given a map package to display. The “classic renderer” will probably be removed completely in a future version — time is better spent improving the new renderer. It may actually be quite a challenge to remove all the old rendering code, as there may be some unexpected internal dependencies and side-effects.

While the 3D model renderer is already using shaders and PBR materials, it will still need some updating to integrate nicely with the new renderer (e.g., draw to the G-buffer with separate colors, normals, etc.). This will be pretty trivial to implement, but existing custom 3D model shaders will also need tweaking.

I’m currently targeting OpenGL 3.3 Core Profile for compatibility with older GPUs, but at some point in the future I intend to also look into Vulkan support. However, this is more of a long-term plan. The graphics code has been written in such a way that the underlying graphics API is abstracted away during normal use, so switching it shouldn’t be a massive challenge. Vulkan would provide opportunities for optimizing performance and doing async compute operations (e.g., for global illumination).

Finally, I’m still eyeing ports for Android, iOS, and Raspberry Pi. At its core, the new renderer is much better aligned with these mobile platforms as it relies on static data wherever possible. However, the shaders will need to be ported to OpenGL ES and likely simplified somewhat when it comes to the more advanced techniques. For example, I don’t expect displacement mapping to be feasible on mobile GPUs any time soon, particularly given the relatively high screen resolutions.

Conclusion

I hope you have enjoyed this short series about rendering! It was a blast exploring this stuff during the first half of the year, and it is a large part of why I haven’t paid much attention to the stable builds recently. There are a number of nice improvements in the 2.1 unstable branch that I will likely end up pushing out as a stable build sooner or later. However, many of the planned improvements for 2.1 will almost certainly be postponed to a later release… I will need to reorganize the Roadmap a bit once again so it’s aligned with my current thinking, particularly when it comes to integrating the new rendering code.